Whether those who claim that cyberwar poses a serious threat to U.S. national security provide sufficient evidence to back their claims has been a bone of contention in public discourse about cybersecurity. As part of our effort to document and analyze shifts in U.S. cybersecurity discourse, we are coding the Cyberwar Discourse Event (CDE) documents that we collect for the types of evidence deployed in support of the claims being made. Additionally, we are categorizing those documents by the types of individuals and institutions that created them.

For example, evidence codes include “expert,” “quantitative,” “simulation or war game,” “historical events & analogies,” and “hypothetical scenarios, thought experiments.” Example institution categories include “government docs,” “op-eds” (written by influential policy makers, military leaders, etc.), “industry reports,” “bill” (e.g. legislation), and “think-tank report.”

Part of what we hope to accomplish in this project is to detect patterns in the overall U.S. cybersecurity discourse that may have gone unnoticed. We also hope to uncover how the discourse has changed over time. Even some admittedly very preliminary analysis and visualization seem to have uncovered an interesting pattern related to the use of evidence by various types of institutional actors.

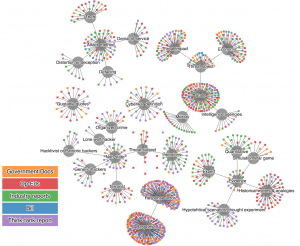

Dr. Lauro Lins, a Postdoctoral Research Associate in the University of Utah’s Scientific Computing and Imaging Institute, created a visualization using preliminary data gathered from only about 20 of the roughly 80 CDE documents that we have collected and coded thus far. While most of the graph shows little of interest, a clear pattern emerges in the area of evidence (bottom right of the graph).

What we can see is that certain types of institutional publications tend to rely more on certain types of evidence than others. Text segments are clustered around the types of evidence with which they were coded as we read through each of the CDEs. They are color coded based on the type of institutional publication from which they were extracted.

We can see that industry reports like those issued by companies such as McAfee or Symantec have tended to make more use of quantitative forms of evidence. Op-eds written by policy makers, military leaders, or other influential voices in the cybersecurity debate have tended to rely more upon references to historical events (e.g. references to prior cyberattacks like Estonia or Georgia) or historical analogies (e.g. the seemingly ever-present analogies to Cold War nuclear deterrence). Finally, the think-tank reports analyzed at the time of this visualization tended to make more use of hypothetical scenarios and thought experiments when making the claim for a serious cybersecurity threat.
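The kind of comparison described above amounts to a cross-tabulation of coded text segments by institution category and evidence code. The following is a minimal sketch of that idea, not the project's actual tooling or data: the `segments` rows are invented purely for illustration, and the helper names `crosstab` and `dominant_evidence` are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical coded segments: (institution category, evidence code).
# These rows are made up for illustration; they are NOT the project's data.
segments = [
    ("industry report", "quantitative"),
    ("industry report", "quantitative"),
    ("industry report", "expert"),
    ("op-ed", "historical events & analogies"),
    ("op-ed", "historical events & analogies"),
    ("op-ed", "expert"),
    ("think-tank report", "hypothetical scenarios, thought experiments"),
    ("think-tank report", "hypothetical scenarios, thought experiments"),
    ("think-tank report", "quantitative"),
]


def crosstab(rows):
    """Count how often each evidence code appears per institution category."""
    table = defaultdict(Counter)
    for institution, evidence in rows:
        table[institution][evidence] += 1
    return table


def dominant_evidence(table):
    """Return the most frequent evidence code for each institution category."""
    return {inst: counts.most_common(1)[0][0] for inst, counts in table.items()}


table = crosstab(segments)

# Print each institution's evidence codes as a share of its coded segments.
for institution, counts in table.items():
    total = sum(counts.values())
    for evidence, n in counts.most_common():
        print(f"{institution:18s} {evidence:45s} {n}/{total}")
```

Reporting counts as a share of each institution's segments, rather than as raw totals, keeps a category with many coded documents from visually swamping one with few.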

Again, this analysis is very preliminary and is based on the coding of only about a fourth of the documents that have been collected and coded to this point. Thus, we will be interested to see if this pattern holds up when the rest of the data is included. Nonetheless, there are a couple of important points that can be made even at this point:

- Though many (including myself) have felt that the current cybersecurity discourse is lacking in its reliance upon evidence, it is clear that this is not entirely the case. Many of those statements that can legitimately be considered representative of the ongoing debate, or which have the potential to influence and shape that debate, do deploy some kind of evidence to support their claims.

- The dispute over whether enough evidence is being provided may stem from a tendency for different groups to favor different kinds of evidence. When members of one group listen to the arguments of another group that favors and deploys a different kind of evidence, the first group may not recognize that evidence is being provided at all.

As someone who has studied the history and sociology of the natural sciences, I do not find this surprising. Even in the sciences, we see that different disciplines can have radically different notions of what counts as “evidence.” It seems reasonable that the same phenomenon would be at work in such a diverse area as cybersecurity, where scientific and technical experts from various disciplinary backgrounds interact not only with one another but also with social scientists, humanities scholars, policy analysts, military professionals, and policy makers.

In a piece that I wrote for The Firewall blog at Forbes.com back in October, I addressed the issue of who gets to have a voice in public debates about highly technical policy matters such as cybersecurity. I argued that

“No one person or group of people will have all the knowledge necessary to ‘know if government is screwing up’. Rather, multiple people with multiple skill sets and areas of expertise, all looking at the same problems from their various perspectives, will give us an idea about the wisdom of government decision making on cybersecurity (or any policy, for that matter).”

I still believe that to be true. But one lesson that might be emerging from the preliminary results presented here is that for such a diverse group of people to have an effective discussion about an issue as important and complex as cybersecurity, the parties to that discussion must 1) recognize that those from different disciplinary and institutional backgrounds will likely deploy different kinds of evidence in support of their claims and 2) recognize both the strengths and limitations of the various types of evidence being deployed.
